Particle detectors measure the properties of particles produced at colliders such as the Large Hadron Collider (LHC). Calorimeters, which are responsible for measuring the energy of the particles, are key components of the four LHC detectors. In the LHC, two high-energy particle beams travel in opposite directions at close to the speed of light and are made to collide at four locations around the collider ring. These locations are the positions of the four experiments, where the four biggest particle detectors reside, [ATLAS](https://home.web.cern.

This secondary particle creation is a complex stochastic process that is typically modeled using Monte Carlo (MC) techniques. These simulations play a crucial role in High Energy Physics (HEP) experiments, but they are also very resource-intensive from a computing perspective: recent estimates show that the HEP community devotes more than 50% of the Worldwide LHC Computing Grid (WLCG), which has a total of 1.4 million CPU cores running around the clock worldwide, to simulation-related tasks.
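To make the idea of Monte Carlo modeling of a stochastic cascade concrete, the following is a minimal toy sketch, not the simulation software used by the experiments. The splitting rule, the threshold, and the names `simulate_shower` and `shower_size_stats` are all illustrative assumptions: each particle above a threshold energy splits into two secondaries that share its energy at random, and many independent showers are sampled to estimate the average outcome.

```python
import random
import statistics


def simulate_shower(energy_gev, threshold_gev=0.1, rng=None):
    """Toy cascade: return the energies of the final secondary particles.

    Every particle above the threshold splits into two secondaries that
    share its energy at random. The splitting rule and the threshold are
    illustrative assumptions, not a physics model.
    """
    rng = rng or random.Random()
    pending = [energy_gev]  # particles that may still split
    final = []              # particles that dropped below the threshold
    while pending:
        energy = pending.pop()
        if energy < threshold_gev:
            final.append(energy)
            continue
        share = rng.uniform(0.1, 0.9)  # random energy sharing (assumed)
        pending.append(energy * share)
        pending.append(energy * (1.0 - share))
    return final


def shower_size_stats(n_events, energy_gev, seed=0):
    """Sample many independent toy showers; return mean and spread of their size."""
    rng = random.Random(seed)
    sizes = [len(simulate_shower(energy_gev, rng=rng)) for _ in range(n_events)]
    return statistics.mean(sizes), statistics.stdev(sizes)


if __name__ == "__main__":
    mean_size, spread = shower_size_stats(n_events=200, energy_gev=10.0)
    print(f"secondaries per shower: {mean_size:.1f} +/- {spread:.1f}")
```

Even in this toy model, the number of secondaries to track grows with the incoming energy, and every event has to be sampled separately, which hints at why full detector simulation is so computationally expensive.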

Moreover, detector simulations are constrained by the need for accuracy. This requirement will become even stricter in the near future with the High Luminosity upgrade of the LHC (HL-LHC), which will increase the complexity of the associated detector data. As a consequence, the HL-LHC will increase the demand for simulations and, in turn, the need for computing resources.

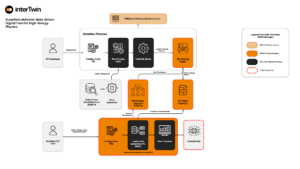

CERN’s interTwin use case concerns a fast particle detector simulation paradigm using Generative Adversarial Networks (GANs). The use case incorporates a convolutional GAN, which we call 3DGAN. A convolutional architecture is a natural fit because calorimeter detectors can be regarded as huge cameras taking 3D pictures of particle interactions. The three-dimensional calorimeter cells are generated as monochromatic pixelated images, with pixel intensities representing the cell energy depositions. A prototype of the proposed 3DGAN approach has already been developed.
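The image analogy can be illustrated with a small sketch of the data representation only; it is not the 3DGAN model itself. A calorimeter event is stored as a 3D grid of cell energies and then rescaled to single-channel ("monochromatic") intensities. The grid size, the normalization to the brightest cell, and the helper names `make_grid`, `add_deposit`, `total_energy`, and `to_image` are hypothetical choices made for illustration.

```python
def make_grid(nx, ny, nz):
    """Create an empty calorimeter grid: one energy value (GeV) per cell."""
    return [[[0.0 for _ in range(nz)] for _ in range(ny)] for _ in range(nx)]


def add_deposit(grid, x, y, z, energy_gev):
    """Accumulate an energy deposition in the cell at index (x, y, z)."""
    grid[x][y][z] += energy_gev


def total_energy(grid):
    """Sum the energy over all cells of the grid."""
    return sum(cell for plane in grid for row in plane for cell in row)


def to_image(grid):
    """Rescale cell energies to intensities in [0, 1], like a 3D grayscale image.

    Normalizing by the brightest cell is an assumption made for this sketch.
    """
    peak = max(cell for plane in grid for row in plane for cell in row)
    if peak == 0.0:
        return [[[0.0 for _ in row] for row in plane] for plane in grid]
    return [[[cell / peak for cell in row] for row in plane] for plane in grid]


if __name__ == "__main__":
    grid = make_grid(3, 3, 3)          # tiny hypothetical 3x3x3 calorimeter
    add_deposit(grid, 1, 1, 1, 2.0)    # shower core
    add_deposit(grid, 1, 1, 2, 0.5)    # tail of the shower
    image = to_image(grid)
    print(total_energy(grid), image[1][1][1], image[1][1][2])
```

In a GAN setup, arrays of this kind would play the role of the images: the generator would produce them, and the discriminator would compare them with arrays obtained from Monte Carlo simulation.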

Monte Carlo simulations are essential in HEP data analysis and detector design. A data-driven digital twin (DT) of a particle detector that accelerates simulations will add value to future HEP experiments and could also benefit other domains, such as nuclear medicine and astrophysics.